Turning old projects into Claude tools

Anthropic's Model Context Protocol (MCP) makes it easy to connect external tools to Claude.

But where do we get the tools to connect with MCP? As someone who builds a lot of projects, I noticed there are a lot of valuable features spread throughout my various codebases. And as it turns out, with AI, there's an easy way to turn these old projects into Claude tools.

In this post I'll show you my two-shot prompting approach to pull features out of old projects and turn them into Claude tools.

Prerequisites

In this approach we'll need:

- An LLM with a large context window (I find o1 in ChatGPT works best for this)

- An old project with features that you'd like to extract into Claude tools

- This really sick (and currently free) prompting tool called Repo Prompt

Setup

In this post, the project I'll turn into a Claude tool is a personal Slack bot I made. One of the particular features it has is the ability to pull up real-time BART transit data. Let's extract this into a Claude tool.
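To give a sense of what's being extracted: the feature boils down to calling BART's real-time departure (ETD) API and flattening its nested response. The sketch below is not the bot's actual code. It assumes BART's legacy `https://api.bart.gov/api/etd.aspx` endpoint with `cmd=etd`, `orig`, `key`, and `json=y` parameters, and a response shaped like `root.station[].etd[].estimate[]`; check BART's API documentation before relying on those details. `buildEtdUrl`, `parseDepartures`, and `Departure` are hypothetical names.

```typescript
// Hypothetical sketch of BART real-time departure logic (not the bot's actual code).
// ASSUMPTION: the legacy ETD endpoint and the JSON response shape below.

interface Departure {
  destination: string;
  minutes: number | "Leaving"; // assumed: BART reports "Leaving" for a departing train
  platform: string;
}

// Assumed response shape, limited to the fields used here.
interface EtdResponse {
  root: {
    station: Array<{
      abbr: string;
      etd?: Array<{
        destination: string;
        estimate: Array<{ minutes: string; platform: string }>;
      }>;
    }>;
  };
}

// Build the request URL for one station (e.g. "EMBR").
function buildEtdUrl(stationAbbr: string, apiKey: string): string {
  const params: Record<string, string> = { cmd: "etd", orig: stationAbbr, key: apiKey, json: "y" };
  const query = Object.entries(params)
    .map(([k, v]) => `${k}=${encodeURIComponent(v)}`)
    .join("&");
  return `https://api.bart.gov/api/etd.aspx?${query}`;
}

// Flatten station -> destination -> estimate into one list, soonest first.
function parseDepartures(body: EtdResponse): Departure[] {
  const departures: Departure[] = [];
  for (const station of body.root.station) {
    for (const etd of station.etd ?? []) {
      for (const est of etd.estimate) {
        departures.push({
          destination: etd.destination,
          minutes: est.minutes === "Leaving" ? "Leaving" : Number(est.minutes),
          platform: est.platform,
        });
      }
    }
  }
  // "Leaving" sorts before any numeric estimate.
  const rank = (d: Departure): number => (d.minutes === "Leaving" ? 0 : d.minutes);
  return departures.sort((a, b) => rank(a) - rank(b));
}

// Usage (needs network access and a BART API key):
// const res = await fetch(buildEtdUrl("EMBR", myApiKey));
// const departures = parseDepartures((await res.json()) as EtdResponse);
```

Keeping the URL building and parsing as pure functions makes the logic easy to lift into a different host, whether that's a Slack bot or an MCP server.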

First, we'll want to clone Anthropic's sample MCP project. I like to clone it into a folder that contains both this sample project and the project I want to turn into a Claude tool:

```
shared-folder
├── weather-server-typescript  # This is Anthropic's sample MCP project
└── my-project                 # The project I want to turn into a Claude tool
```

Creating the prompt

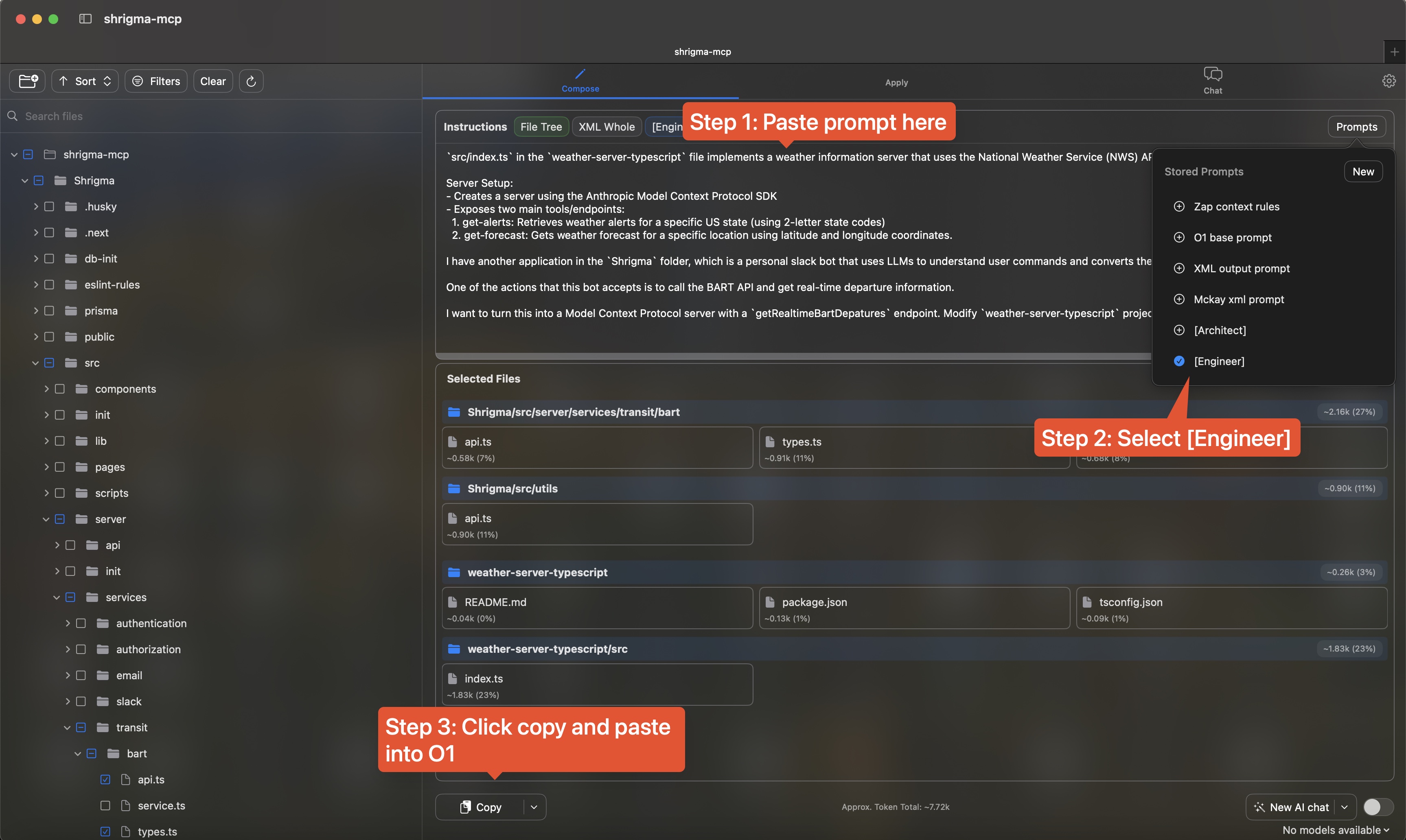

Next, open Repo Prompt and load the folder that we just created.

Repo Prompt helps you construct a prompt that you then copy to your clipboard and paste into your LLM. It adds a text representation of your folder structure, the contents of any files that you select, and some instructions to guide the LLM.
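As a rough mental model, the assembled prompt is three parts in sequence: a folder tree, the selected files' contents, and your instructions. The sketch below is not Repo Prompt's real output format; `buildPrompt`, `renderTree`, `SelectedFile`, and the `<file_tree>`/`<file>`/`<instructions>` delimiters are all hypothetical and only illustrate the idea.

```typescript
// Hypothetical sketch of prompt assembly. NOT Repo Prompt's actual format.
interface SelectedFile {
  path: string; // path relative to the shared folder
  contents: string; // full text of the file
}

// Render a flat list of relative paths as an indented tree, one node per line.
function renderTree(paths: string[]): string {
  const lines: string[] = [];
  const seen = new Set<string>();
  for (const p of [...paths].sort()) {
    const parts = p.split("/");
    parts.forEach((part, depth) => {
      const key = parts.slice(0, depth + 1).join("/");
      if (!seen.has(key)) {
        seen.add(key);
        lines.push(`${"  ".repeat(depth)}${part}`);
      }
    });
  }
  return lines.join("\n");
}

// Tree first, then each selected file, then the instructions.
function buildPrompt(allPaths: string[], selected: SelectedFile[], instructions: string): string {
  const fileBlocks = selected.map((f) => `<file path="${f.path}">\n${f.contents}\n</file>`);
  return [
    "<file_tree>",
    renderTree(allPaths),
    "</file_tree>",
    ...fileBlocks,
    "<instructions>",
    instructions,
    "</instructions>",
  ].join("\n");
}
```

Putting the tree first gives the LLM a map of the whole folder, even for files whose contents you chose not to include.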

The file selector on the left is where you choose what files you want to include in your prompt. Here are the files we'll include:

- Include all of the Anthropic sample project, minus the `build` folder and `package-lock.json`

- Include all files in your target project that contain logic related to the feature you want to extract. Context windows are big nowadays, so you can be generous with this selection.
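The selection rule for the sample project is simple enough to express as a filter. Repo Prompt handles this through its file selector, so `includeSamplePath` is a hypothetical helper for illustration only; it assumes `/`-separated paths relative to the `weather-server-typescript` root, and the example path list is made up.

```typescript
// Hypothetical filter mirroring the manual selection described above.
// ASSUMPTION: POSIX-style paths relative to weather-server-typescript/.
function includeSamplePath(relativePath: string): boolean {
  const segments = relativePath.split("/");
  // Skip anything inside the compiled build/ output folder.
  if (segments[0] === "build") return false;
  // Skip the lockfile: it is long and says nothing about how the server works.
  if (relativePath === "package-lock.json") return false;
  return true;
}

// Example (made-up) file list for the sample project.
const samplePaths = [
  "package.json",
  "package-lock.json",
  "tsconfig.json",
  "src/index.ts",
  "build/index.js",
];
const selected = samplePaths.filter(includeSamplePath);
```

Excluding generated and lock files keeps the token budget for the code that actually matters: the sample's source and your target project.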

Then we construct the prompt. Here is the base that I always put in my prompt when using the Anthropic sample:

```
`src/index.ts` in the `weather-server-typescript` folder implements a weather information server that uses the National Weather Service (NWS) API. Here's a general overview of what it does:

Server Setup:
- Creates a server using the Anthropic Model Context Protocol SDK
- Exposes two main tools/endpoints:
  1. get-alerts: Retrieves weather alerts for a specific US state (using 2-letter state codes)
  2. get-forecast: Gets weather forecast for a specific location using latitude and longitude coordinates.
```

Then we need to add some context about the target project and ask the LLM to extract logic from it into an MCP-compliant server. For this example of a Slack bot with BART data, I use this prompt:

```
I have another application in the `Shrigma` folder, which is a personal Slack bot that uses LLMs to understand user commands and converts the messages into bot actions.

One of the actions that this bot accepts is to call the BART API and get real-time departure information.

I want to turn this into a Model Context Protocol server with a `getRealtimeBartDepartures` endpoint. Modify the `weather-server-typescript` project to implement this tool. Maintain the engineering practices from the original codebase, such as keeping good types intact.
```

The prompt is almost ready. Repo Prompt comes with some premade prompt additions to steer the LLM's output. What I found to work well is its [engineer] setting, which basically instructs the LLM to act like an engineer and propose changes to the codebase without a fixed structure (i.e., it does not ask the LLM to return XML or a git diff).

Click the "Copy" button at the bottom, and the fully constructed prompt will be on your clipboard.

Coding the MCP server

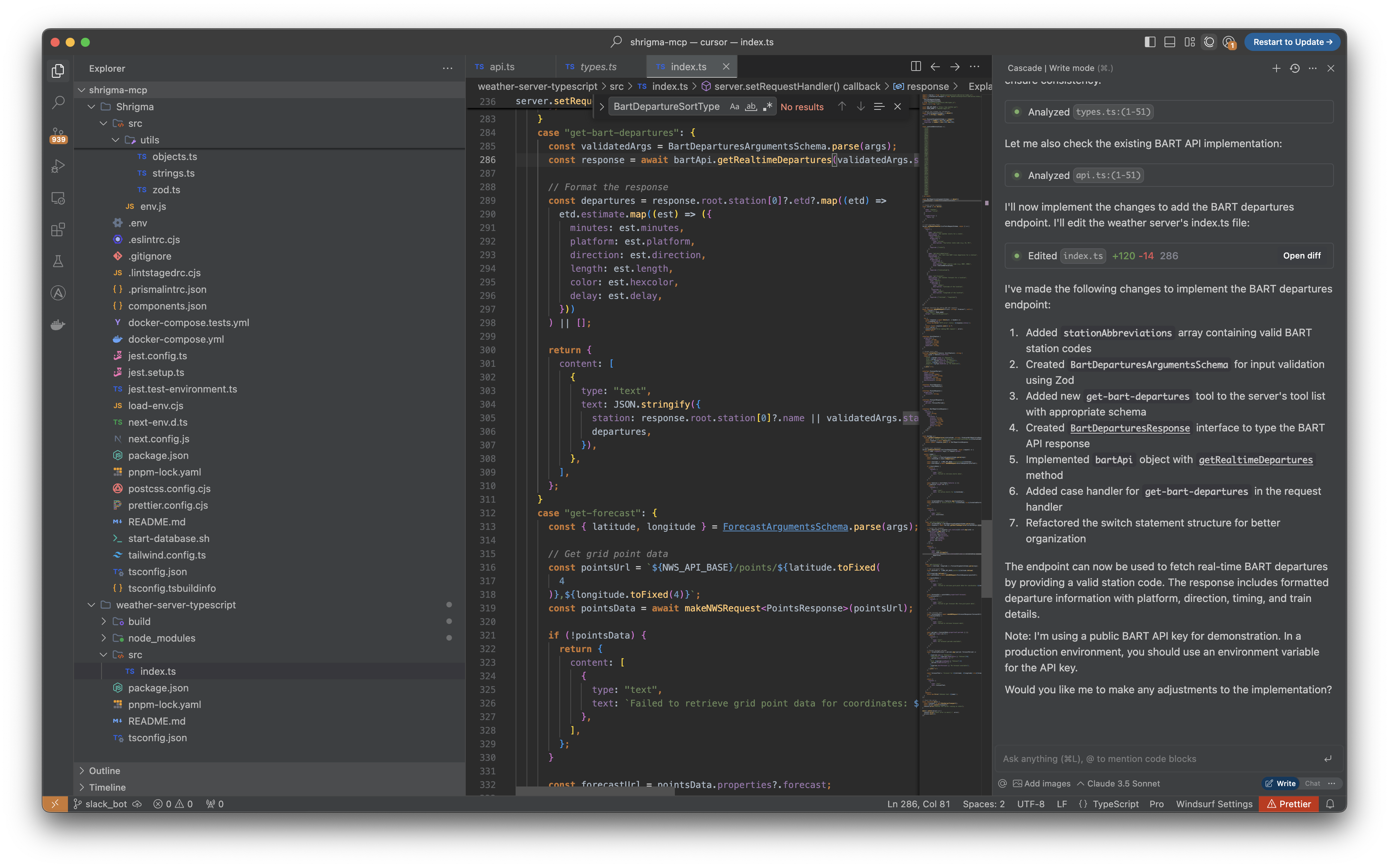

Now we move into our AI code editor's multi-file edit mode. I use Windsurf Cascade, but Cursor Composer should work as well.

Paste in the prompt you copied from Repo Prompt, let the editor apply the changes, and... that's it!

In most cases, you should now have a working MCP server. It was that quick to take logic you've already written and transform it into a tool that Claude can use.
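If you want to sanity-check what the editor generated, it helps to know the two shapes an MCP tool deals in: a tool definition (name, description, and a JSON Schema `inputSchema`) advertised to Claude, and a call result whose `content` is a list of items such as `{ type: "text", text }`. The sketch below mirrors those shapes from the MCP specification without importing `@modelcontextprotocol/sdk`, so it is not what the sample project literally contains; `bartTool`, `formatDepartures`, and the `station` parameter are hypothetical.

```typescript
// Hypothetical, dependency-free sketch of the MCP-facing side of the BART tool.
// Shapes follow the MCP spec's tool definition and tool-call result; no SDK is used.

interface ToolDefinition {
  name: string;
  description: string;
  inputSchema: {
    type: "object";
    properties: Record<string, { type: string; description: string }>;
    required?: string[];
  };
}

interface TextContent {
  type: "text";
  text: string;
}

interface CallToolResult {
  content: TextContent[];
}

interface Departure {
  destination: string;
  minutes: number | "Leaving";
  platform: string;
}

// What the server might advertise when Claude lists its tools.
const bartTool: ToolDefinition = {
  name: "getRealtimeBartDepartures",
  description: "Get real-time BART departures for a station",
  inputSchema: {
    type: "object",
    properties: {
      station: { type: "string", description: "BART station abbreviation, e.g. EMBR" },
    },
    required: ["station"],
  },
};

// Turn parsed departures into the plain-text content Claude will read.
function formatDepartures(station: string, departures: Departure[]): CallToolResult {
  if (departures.length === 0) {
    return { content: [{ type: "text", text: `No departures found for ${station}.` }] };
  }
  const lines = departures.map((d) => {
    const when = d.minutes === "Leaving" ? "leaving now" : `in ${d.minutes} min`;
    return `${d.destination} (platform ${d.platform}): ${when}`;
  });
  return { content: [{ type: "text", text: [`Departures from ${station}:`, ...lines].join("\n") }] };
}
```

Returning compact, human-readable text rather than the raw API payload keeps Claude's context small and makes its answers easier to ground.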

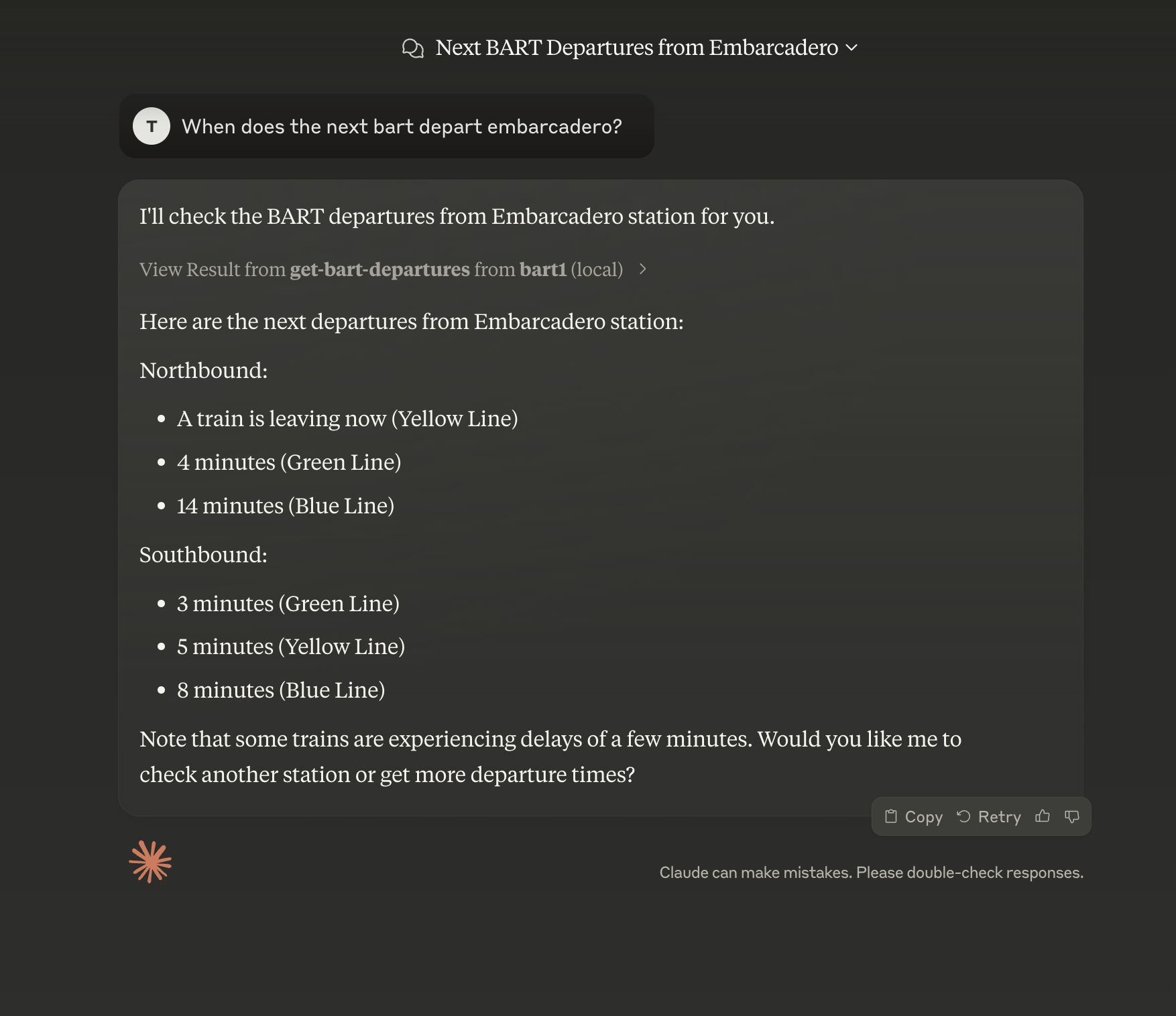

Testing the MCP implementation in Claude

The last step is to use our tools in Claude. Claude's documentation covers this, but I'll briefly run through it now.

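First, make sure the server is built (the sample project's `npm run build` script compiles it to `build/index.js`). Then, on your Mac, open `~/Library/Application Support/Claude/claude_desktop_config.json` (or create it if it doesn't exist) and add the following entry: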

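```json
{
  "mcpServers": {
    "my-tool": {
      "command": "node",
      "args": [
        "PATH_TO_YOUR_PROJECT/build/index.js"
      ]
    }
  }
}
```

Replace `PATH_TO_YOUR_PROJECT` with the absolute path to your MCP server project. The next time you restart Claude, your new tool will be loaded automatically. 🥳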
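If your config file already lists other servers, the new entry has to be merged into the existing `mcpServers` object rather than overwriting the file. Here is a small sketch of that merge, assuming Node.js; `claudeConfigPath`, `addMcpServer`, and `installServer` are hypothetical helpers, and the path is the macOS location given above.

```typescript
import { existsSync, readFileSync, writeFileSync } from "node:fs";
import { homedir } from "node:os";
import { join } from "node:path";

interface McpServerEntry {
  command: string;
  args: string[];
}

interface ClaudeConfig {
  mcpServers?: Record<string, McpServerEntry>;
  [key: string]: unknown; // keep any other settings untouched
}

// macOS location of the Claude Desktop config file.
function claudeConfigPath(): string {
  return join(homedir(), "Library", "Application Support", "Claude", "claude_desktop_config.json");
}

// Return a new config with `name` added; other keys and servers are preserved.
function addMcpServer(config: ClaudeConfig, name: string, entry: McpServerEntry): ClaudeConfig {
  return { ...config, mcpServers: { ...(config.mcpServers ?? {}), [name]: entry } };
}

// Read (or start from an empty object), merge, and write back.
// Note: this does not create the Claude directory if it is missing.
function installServer(name: string, entry: McpServerEntry, path: string = claudeConfigPath()): void {
  const existing: ClaudeConfig = existsSync(path) ? JSON.parse(readFileSync(path, "utf8")) : {};
  writeFileSync(path, JSON.stringify(addMcpServer(existing, name, entry), null, 2));
}
```

Building a new object instead of mutating the parsed one makes the merge easy to test without touching your real config file.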

To view the full source code, check it out on GitHub. And feel free to connect with me on Twitter if you want to chat!